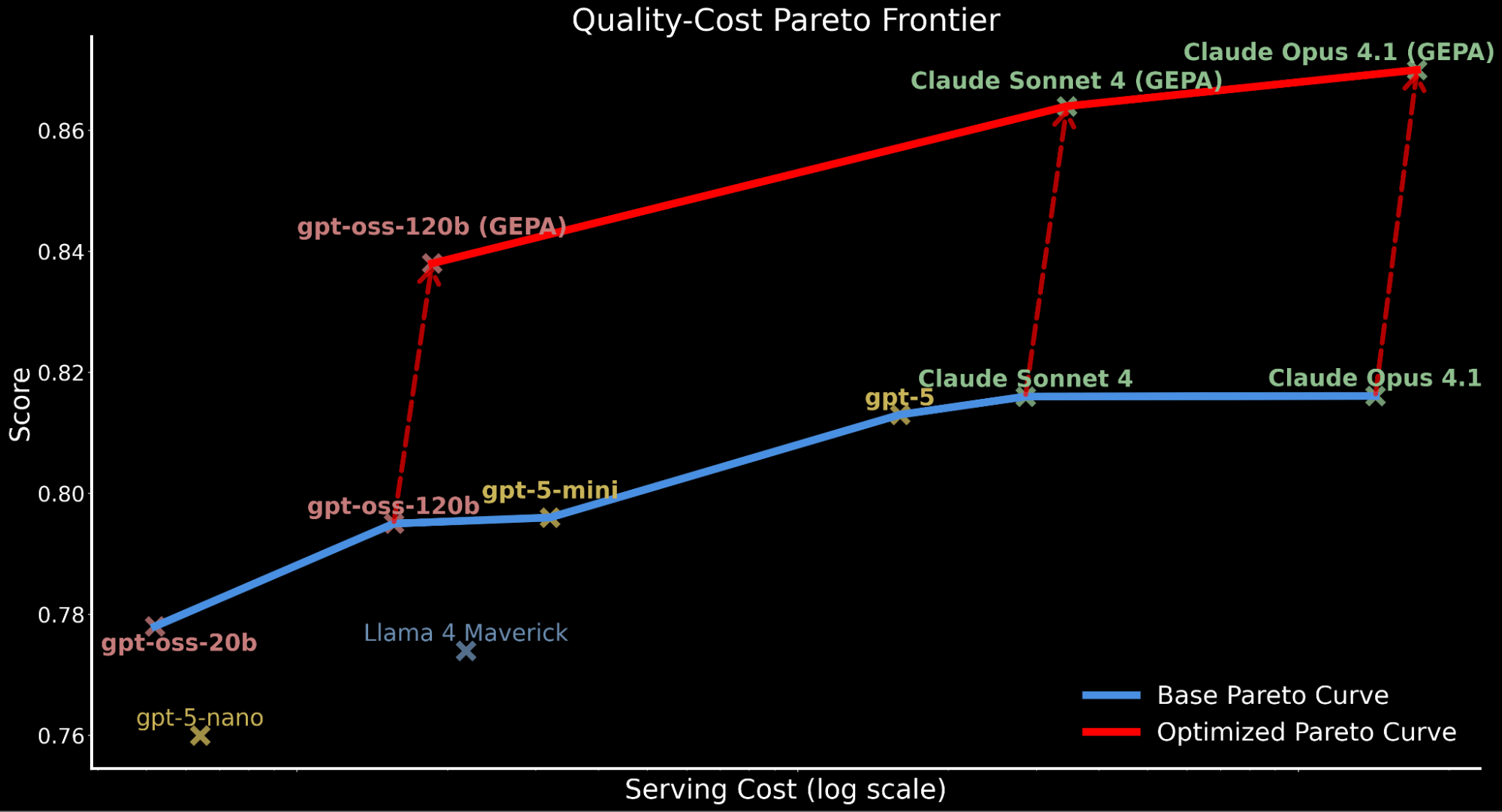

This week I published a research blog post with Databricks on how we shift the Pareto frontier of enterprise agents using automated prompt optimization.

Read More

Shifting the Pareto Frontier of Enterprise AI with Automated Prompt Optimization

Machine Learning


Read More

I gave a presentation at the Ray Summit on my work building Multimodal Foundation Models for Document Automation at Uber. It is always a great pleasure to publicly share what I have been building over the past year!

Read More

In this blog post, I will walk you through how to build a fast and simple image search tool. I developed an image search application that uses multimodal foundation models to return highly accurate and relevant results. By following this blog post and our code base, you can easily build one yourself!

Read More

In this blog post, I cover one of the award-winning papers at NeurIPS 2022. The paper presents LAION-5B, a dataset of 5.9 billion image-text pairs, to further push the scale of open datasets for training and studying state-of-the-art language-vision models. Training at this scale yields strong gains in zero-shot transfer and robustness.

Read More

Question answering models are often used to automate responses to human questions by leveraging a knowledge base. My team at Stanford aims to build a robust question answering system that works across datasets from multiple domains. We explore two transformer-based Sparsely-Gated Mixture-of-Experts architectures and conduct an extensive ablation study to find the best-performing configuration.

Read More

Metrics are critical in machine learning projects. They help a team prioritize its resources and concentrate on a single, clear objective. I am always amazed at how quickly my team executes, and how much momentum we build, once we are aligned on a single metric to optimize. In the end, we usually accomplish goals that seemed impossible at the beginning.

Read More

Based on our past experience at Landing AI, we have developed best practices for model training and evaluation. In this article, I share a few high-priority tasks during model training. We openly share our guiding principles to help machine learning engineers (MLEs) through model training and evaluation.

Read More

At Landing AI, we observed many projects that took an unnecessarily long and painful path to completion because of ambiguous defect definitions or poor labeling quality. In contrast, a dataset with high-quality labels makes the lives of machine learning engineers much easier and the whole project lifecycle much shorter. It is therefore very important to invest time early in a project to clarify defect definitions and formalize the labeling process.

Read More

This paper reminds me of the many times our production model behaved strangely and engineers had to spend hours investigating root causes and then rolling back or pushing fixes. Such mistakes meant lots of late nights. I agree with the paper that a data validation system, if implemented correctly, can save a significant number of engineering hours by proactively catching important errors and diagnosing model errors more efficiently.

Read More

At Landing AI, I have gone through several projects where we developed an end-to-end machine learning system from “scratch.” That is, before the project started there was no existing data collection procedure, so we had to start from zero, including setting up the cameras.

Read More

In my mind, there are two types of Full Stack Machine Learning Engineering: one vertical and one horizontal.

Read More

Meta learning is one of the promising lines of work that aim to solve the small-data problem in machine learning. Currently, many people working on AI are thinking day and night about how to scale AI systems and improve their profit margins. One main challenge is how to quickly build an AI model that reaches human-level performance on classes with only a few samples each.

Read More

I gave this tech talk at Landing AI this week and would like to share it on my website. It covers the core concepts of photography and touches briefly on the recent trend of computational photography.

Due to IP restrictions, I removed a section about the imaging solution design in one of our internal projects. I will share a blog post on that topic later.

Read More

Business models that leverage location data from mobile devices are part of a growing, multi-billion dollar market. Where you are matters much more than you might think.

Read More

In this report, we look at the application of algorithmic dynamic pricing through case studies of ride-hailing startups Uber and Lyft and e-commerce giant Amazon. We also discuss potential issues involved in personalized dynamic pricing.

Read More

Pinterest’s Visual Search

In this report, we use an Alibaba case study to show how visual search can help e-commerce customers find appropriate products in a massive catalog. We then examine how Pinterest uses the technology as an ad platform and to match brands and products to users.

Read More

At the Stanford AI Salon

Today I went to Stanford to attend an AI Salon session hosted by the Stanford AI Lab. Today's topic was "Deep Reinforcement Learning for Real World Systems", and the speakers were Prof. Sergey Levine and Prof. Mykel Kochenderfer.

Read More

A big theme for Google recently has been on-device machine learning applications. Last week Google officially launched Android Pie. The new mobile OS integrates many AI applications and opens up its infrastructure to external developers.

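Read More

AutoML enables machines to automatically develop machine learning models for each new application scenario, an important step in Google's "AI-first" strategy.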

Read More